SSD Reliability Issues

Significantly higher speeds and better performance – that’s the main reason why more and more users are upgrading their rigs with solid-state drives (SSDs). Anyone who has got used to working on a computer with an SSD will not want to go back to a hard disk alone. Plus, SSD prices are dropping while capacities and speeds keep increasing.

However, modern drives are plagued by the suspicion that they forget data as quickly as they can save it. This suspicion was fuelled from the very beginning by a basic drawback of Flash memory, the technology on which all SSDs are based: each memory cell in a modern SSD can only cope with 1,000 to 3,000 delete and write operations. First-generation SSDs such as Intel’s X25 dispelled these concerns by spreading the wear impressively evenly across the cells and achieving a long service life. The next generation, however, drew attention to itself through its problems: owing to a firmware defect, the SandForce SF-2000 SSD controller, which was supposed to offer top-level performance at a reasonable price, managed to crash dozens of computers.

And SSDs using earlier versions of the Indilinx Barefoot controllers became strained under certain circumstances and caused Windows to freeze.

Short-lived Flash cells

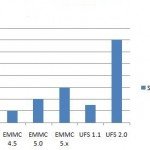

Manufacturers have managed to do away with such firmware problems, and the storage capacities of SSDs are on the rise. The cells are getting smaller and smaller, yet each one can now store two or three bits instead of just one. This comes at the cost of endurance: multi-level cells (MLC) can only withstand around 3,000 write operations and triple-level cells (TLC) only around 1,000, while single-level cells (SLC) can cope with 100,000.

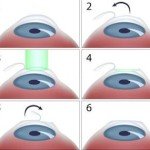

The reason for the Flash cells’ limited service lives lies in the way they work (refer to graph on next page). A cell stores its information in the form of a floating gate that either is or is not charged. To charge the floating gate, a control voltage is applied at the control gate. This voltage drives electrons through the floating gate’s thin insulation layer. The electrons stay where they are even after the control voltage has been removed.

In this charged condition, the floating gate generates an electric field that blocks the read current. Consequently, the cell contains a digital zero. To write a digital one into the cell, a reversed control voltage is applied, which pulls the electrons through the insulation and out of the floating gate. Once discharged, the floating gate allows the read current to flow, in which case the cell contains a digital one.

The thin insulation layer around the floating gate is what limits the cell’s service life. It wears out whenever electrons tunnel through it, and over time its insulating capacity grows weaker and weaker. Furthermore, electrons get stuck in the layer, which then begins to counteract the control voltage. As a result, the control voltage has to be raised and applied for longer, which in turn speeds up the deterioration of the insulation layer and lengthens each write operation. Eventually the cell becomes slow enough to slow down the drive, at which point the controller discards it. Write and delete operations are thus the only things that shorten the service life.

The problem is made worse by the fact that the memory cells are connected to each other in such a way that only entire blocks of 256KB or 512KB can be deleted. Consequently, every delete operation within the SSD wears out a whole block of cells rather than just the ones being changed. One of the most important tasks of the controller is to minimise this damaging “write amplification” by pooling write operations.
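To make the effect of the block size concrete, here is a minimal sketch of write amplification as a simple ratio: Flash physically rewritten divided by data the host actually wanted to write. The 256KB and 512KB block sizes come from the text; the 4KB write size, the number of pooled writes and both function names are assumptions chosen purely for illustration. Real controllers remap data in far more sophisticated ways.

```python
# Simplified model: every write forces one whole erase block to be rewritten.
# Block sizes are taken from the article; the 4 KB write size is an assumption.

def worst_case_write_amplification(block_kb: int, write_kb: int) -> float:
    """Flash rewritten divided by host data when each small write costs a full block."""
    return block_kb / write_kb


def pooled_write_amplification(block_kb: int, write_kb: int, pooled_writes: int) -> float:
    """Amplification when the controller collects several small writes
    and commits them to a single block in one go."""
    host_kb = write_kb * pooled_writes
    if host_kb > block_kb:
        raise ValueError("pooled writes do not fit into one block")
    return block_kb / host_kb


for block_kb in (256, 512):
    single = worst_case_write_amplification(block_kb, 4)
    pooled = pooled_write_amplification(block_kb, 4, 32)
    print(f"{block_kb} KB block: {single:.0f}x unpooled, {pooled:.0f}x with 32 pooled writes")
```

In this toy model, pooling 32 small writes reduces the wear per byte of user data by a factor of 32, which is why pooling matters so much for the service life.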

Regardless of these fundamental Flash-related disadvantages, the demand for larger and more economical SSDs is compelling manufacturers to increase the data density further. Modern drives store two or three bits per cell instead of just one. This is done with the help of several charge levels, each of which is assigned a particular combination of bits. The result is a significant reduction in the voltage tolerances, which are precisely what is needed to compensate for the deteriorating insulation layers. The situation is aggravated further by the shrinking structure widths of the memory chips. This does make lower prices and ever-increasing capacities possible, but the insulation layer shrinks along with all the other structures, which makes it more vulnerable to deterioration.
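The relationship between bits per cell and voltage tolerance can be sketched in a few lines. The number of charge levels doubles with every additional bit; the assumption that the levels share a fixed voltage window in equal parts is a simplification made here for illustration, not a statement about any particular chip, and the function names are hypothetical.

```python
# Each additional bit per cell doubles the number of charge levels
# that have to be told apart within the same voltage window.

def voltage_levels(bits_per_cell: int) -> int:
    """Number of distinct charge levels needed to encode the given number of bits."""
    return 2 ** bits_per_cell


def relative_margin(bits_per_cell: int) -> float:
    """Share of the voltage window available per level,
    assuming (for illustration only) equally sized levels."""
    return 1 / voltage_levels(bits_per_cell)


for name, bits in (("SLC", 1), ("MLC", 2), ("TLC", 3)):
    levels = voltage_levels(bits)
    margin = relative_margin(bits)
    print(f"{name}: {bits} bit(s) per cell, {levels} levels, {margin:.1%} of the window per level")
```

Under this assumption, a three-bit cell has only a quarter of the tolerance per level that a one-bit cell has, so the same amount of insulation wear leads to read errors much sooner.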

Limited service life

Flash memory cells, which are used by all SSDs, can only withstand a limited number of write-operations. With the ever-increasing storage densities of modern SSDs, this number is continuously decreasing.

Flash memory in SSD wears out

Flash cells store information in the form of electric charge. The insulating layer that the electrons have to penetrate during delete and write operations keeps wearing out until the cell can no longer hold a charge.

Increasing storage density

Earlier SSDs used two voltage levels to store just one bit per cell. More voltage levels equate to more bits, but lower tolerances. This results in the cell becoming unusable a lot quicker.

This is how long SSDs last

The fear that SSDs would become unusable after a brief period of normal use turned out to be unfounded. Thomas Weiser, who is the storage product manager at the IT dealer Cyberport, has an overview of the return rates: “When it comes to SSDs, the return rate is minimally higher than the return rate for conventional hard drives. It has decreased in the last six months.”

How long an SSD will work also depends on the intensity of use for which it was designed. Manufacturers usually signal this through the length of the warranty. The high-priced OCZ Vector 180 SSD is designed for 50GB of data being written daily over a period of five years, and it has a correspondingly long warranty period. The cheaper OCZ Vertex 450 is designed for 20GB of data being written daily over a period of three years. That is quite a lot for day-to-day use, in which harmless read operations predominate. Those who wish to minimise the likelihood of problems would be well advised to go for a model with a five-year warranty. Furthermore, if the daily write volume stays the same, an SSD with twice the capacity theoretically lasts about twice as long as the smaller model.
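The two design scenarios can be converted into a total write volume with simple arithmetic. The sketch below uses the daily volumes and periods quoted above; the 365-day year, the decimal conversion of 1,000GB per TB and the function names are assumptions. The second function illustrates why doubling the capacity doubles the theoretical service life, assuming ideal wear distribution and no write amplification; the capacities and the 3,000 cycles used in the example are illustrative values, not specifications of the drives named.

```python
def total_writes_tb(gb_per_day: float, years: float) -> float:
    """Total data written over the design period, in TB (1 TB = 1,000 GB)."""
    return gb_per_day * 365 * years / 1000


def theoretical_life_years(capacity_gb: float, cycles_per_cell: int, gb_per_day: float) -> float:
    """Idealised service life: every cell is worn evenly and nothing is amplified."""
    return capacity_gb * cycles_per_cell / gb_per_day / 365


print(f"50 GB/day for 5 years: {total_writes_tb(50, 5):.2f} TB")
print(f"20 GB/day for 3 years: {total_writes_tb(20, 3):.2f} TB")

# Hypothetical capacities with the same daily write volume.
small = theoretical_life_years(128, 3000, 20)
large = theoretical_life_years(256, 3000, 20)
print(f"Ratio of service lives (256 GB vs 128 GB): {large / small:.1f}")
```

The idealised figures are far higher than any warranty period, which reflects how much headroom the simple model ignores: write amplification, uneven wear and early cell failures all reduce the real number.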

Many SSDs store usage-related statistics such as the amount of data that has been written and a wear level indicator. The CrystalDiskInfo tool can display these SMART values. However, the relative numbers, which decrease from 100 (new condition) to 0 (worn out), are abstract. The SSDLife tool uses this information to calculate the expected remaining service life. When the programme is launched for the first time, it notes down how much data has already been written – the longer it remains installed, the more precise the prediction.
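How a prediction of this kind might be derived can be sketched as a linear extrapolation between two readings of the wear indicator. This is not SSDLife's actual algorithm, which is not described here; the function name, its parameters and the sample readings are hypothetical, and the only thing taken from the text is the 100-to-0 scale.

```python
from datetime import date
from typing import Optional


def estimate_remaining_days(first_seen: date, first_wear: float,
                            today: date, current_wear: float) -> Optional[float]:
    """Extrapolate linearly from two wear readings (100 = new, 0 = worn out).

    Returns None while there is not yet enough history to measure a rate.
    """
    elapsed_days = (today - first_seen).days
    consumed = first_wear - current_wear
    if elapsed_days <= 0 or consumed <= 0:
        return None
    wear_per_day = consumed / elapsed_days
    return current_wear / wear_per_day


# Hypothetical readings: one point lost in the first ten days of observation.
remaining = estimate_remaining_days(date(2015, 1, 1), 100, date(2015, 1, 11), 99)
print(f"Estimated remaining service life: {remaining:.0f} days")
```

The sketch also shows why the estimate improves with time: with only a few days of history, a single point of wear more or less changes the result dramatically.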

However, many drives keep working for a while even after the SMART values predict the imminent demise of the SSD. Nevertheless, you should set up regular backups before the point at which your SSD eventually does give out.